Theme

Medical Physics

INSTITUTION

Al-Neelain University

Princess Nourah Bint Abdulrahman University

In this study, we propose a machine learning (ML) framework based on artificial neural networks (ANN) to generate a synthetic T2-weighted magnetic resonance imaging (MRI) sequence from a T1-weighted sequence.

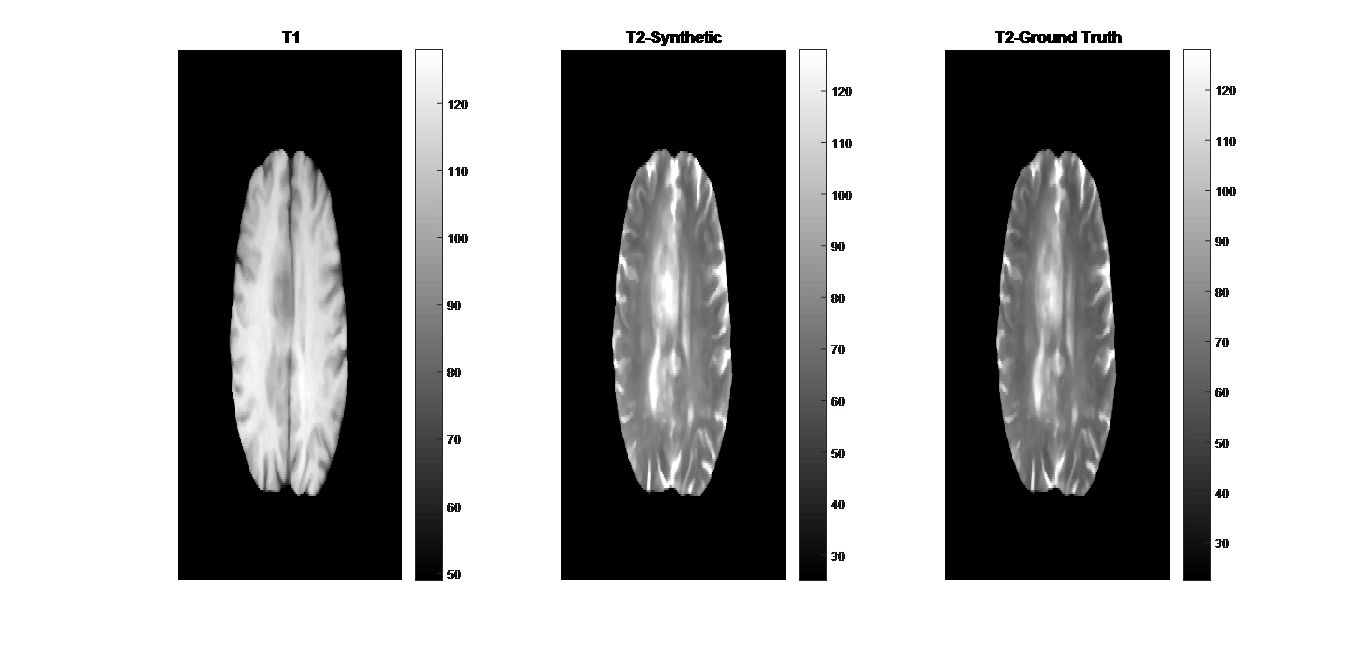

Fig. 1: Synthetic T2-weighted MR image generated from the T1-weighted image with the ANN model. (left) T1-weighted MR image, (middle) synthetic-T2-weighted MR image, and (right) the ground truth T2-weighted MR image.

The mean MAE for the synthesized T2-weighted images was 4.340.

The mean values of the PSNR and SNR were -14.328 and 18.861, respectively.

The mean SSIM was 0.991, indicating that the synthetic T2-weighted MR images/sequences closely matched the ground truth T2-weighted MR images/sequences.

The maximum MSE obtained during model validation was 1508.136 (root MSE of 38.835).

The correlation coefficient was excellent, with a value of 0.981 during training, validation, and testing.
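As an illustration of how the pixel-wise error metrics reported above are conventionally defined, the following is a minimal sketch using only the Python standard library. It is not the code used in this study: the function names, the flattened pixel lists, and the `data_range` default of 255 are assumptions made for the example, and the intensity scale actually used for the reported PSNR is not stated here.

```python
import math
from typing import Sequence


def _check(reference: Sequence[float], synthetic: Sequence[float]) -> None:
    """Both images must be non-empty and hold the same number of pixels."""
    if len(reference) == 0 or len(reference) != len(synthetic):
        raise ValueError("images must be non-empty and equally sized")


def mae(reference: Sequence[float], synthetic: Sequence[float]) -> float:
    """Mean absolute error over flattened pixel intensities."""
    _check(reference, synthetic)
    return sum(abs(r - s) for r, s in zip(reference, synthetic)) / len(reference)


def mse(reference: Sequence[float], synthetic: Sequence[float]) -> float:
    """Mean squared error over flattened pixel intensities."""
    _check(reference, synthetic)
    return sum((r - s) ** 2 for r, s in zip(reference, synthetic)) / len(reference)


def rmse(reference: Sequence[float], synthetic: Sequence[float]) -> float:
    """Root mean squared error, the square root of the MSE."""
    return math.sqrt(mse(reference, synthetic))


def psnr(reference: Sequence[float], synthetic: Sequence[float],
         data_range: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB.

    data_range is the assumed maximum intensity span (hypothetical default).
    """
    error = mse(reference, synthetic)
    if error == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(data_range ** 2 / error)


# Toy 4-pixel example (made-up intensities, not study data)
ground_truth_t2 = [0.0, 50.0, 100.0, 150.0]
synthetic_t2 = [10.0, 50.0, 90.0, 150.0]

print(mae(ground_truth_t2, synthetic_t2))   # 5.0
print(mse(ground_truth_t2, synthetic_t2))   # 50.0
print(rmse(ground_truth_t2, synthetic_t2))
print(psnr(ground_truth_t2, synthetic_t2))
```

With these definitions, the square root of the reported maximum MSE, `math.sqrt(1508.136)`, is approximately 38.835, which agrees with the root MSE quoted above.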

- Five sets of brain MRI sequences, each consisting of both T1-weighted and T2-weighted sequences and collected from multiple institutions, were used in this study. An ML-based framework using an ANN was developed to generate a synthetic T2-weighted MR image/sequence from the T1-weighted MR image/sequence.

- The model was trained on 70% of the data, and validated and tested on the remaining 30%. Given a T1-weighted MR image/sequence as input, the trained ANN model produces a synthetic T2-weighted MR image/sequence.

- Model accuracy was evaluated using the mean squared error (MSE), mean absolute error (MAE), a regression plot, peak signal-to-noise ratio (PSNR), and structural similarity index (SSIM).

- We demonstrated an ML-based framework for generating a synthetic T2-weighted MR image/sequence from the T1-weighted image/sequence.

- The ANN model achieved high accuracy, producing synthetic T2-weighted MR images similar to the ground truth ones.

- This framework can help improve the quality and versatility of multi-parametric MRI sequences and eliminate the need to acquire both MRI sequences.

